Chris Reynolds: Did the Hype Associated with Early AI Research Lead to Alternative Routes Towards Intelligent Interactive Computer Systems Being Overlooked?

Artificial Intelligence research has involved chasing one heavily funded and overhyped paradigm after another. The study of commercially unsuccessful projects can tell you not only about the economic and political environments but also about which projects should get funded and which should be abandoned.

What I’m talking about is a project I was involved with [designing an interactive language called CODIL], which was abandoned. Looking through the project archive with my background as a long-retired project leader, I can see that it simply didn’t correspond to the fashionable paradigm.

I was effectively told that, to get publicity, I should produce a working demonstration and an educational package on the BBC Micro.

And I got rave reviews, in New Scientist, Psychology Today and the leading educational supplements, and I pinned them on the noticeboard.

But I was told: ‘Take them down, you are bringing the department into disrepute. AI is all about getting grants for big, big, big computers’.

At the time I was dealing with the problem of a family suicide and decided I would take early retirement rather than have a complete mental breakdown. And that was why the project ended.

To say a bit more about myself: I’m neurodiverse. I was autistic at school, although it wasn’t diagnosed in those days, and I recently discovered I have aphantasia, which means I can’t recall visual images. So when people were saying AI is all about pattern recognition and reading text, it passed completely over me.

Complex Systems

I did, however, get involved in complex systems. The first was a PhD in chemistry involving some pretty horrific maths, and one of the real problems was that the theoreticians didn’t understand experimental chemistry and the experimental chemists didn’t understand theoretical chemistry. And they all liked to quote each other and show how clever they were.

That got me interested in scientific information and in 1962 I started as a graduate level clerk handling mail (reading research and development correspondence) in an international organisation. In effect I was acting as a human chatbot.

I was archiving, answering questions and writing reports on the mail that was coming in, and I thought that computers might help. I knew nothing about them, but I came across the work of Vannevar Bush, who in 1945 wrote the article “As We May Think”, and I decided to explore how computers could help. I was also interested in the money, as I wasn’t being paid very well. I then moved to work on what I now know was one of the most complex sales accounting systems in the UK in the mid-1960s, running on LEO III.

I suggested that the opaque, very complicated invoicing system for a million ever-changing sales contracts could be reformatted in a sales-friendly, transparent form when terminals became available. And I suggested we design a computer that could do this, but I wasn’t taken seriously.

At this stage in my career, however, I was headhunted by a member of the LEO team, who knew a lot about why the Shell and BP systems wouldn’t work, to work on the future requirements for the next generation of large commercial computers, which would have terminals and, hopefully, integrated management information systems.

Proposal for an experimental human-friendly electronic clerk

This research resulted in a proposal for an experimental human-friendly electronic clerk using an interactive language called CODIL (Context Dependent Information Language). It would handle a wide range of commercial, data-oriented tasks.

The main requirement was that the system should be self-documenting, 100% transparent, and able to work symbiotically with human staff.

Maybe because I am neurodiverse, I didn’t think I was proposing anything unusual; it seemed obvious to me at the time. The work was funded by David Caminer and John Pinkerton, and I started writing a computer simulation to show that the idea worked, and it did work, to the level of the original proposals.

However, there was a problem. ICL was formed and, as anyone who knows anything about ICL will know, Caminer and Pinkerton were pushed to one side in the process, and I was in one of the research divisions that was closed down.

However, I was given permission to take the research elsewhere. I still hadn’t realised why what I was doing was novel. What I urgently needed to do was get away from technology and move into what are called the human fields. In 1971 I chose to go to Brunel University, which had only recently stopped being a technical college. I had no experience of unconventional research, and Brunel was not dedicated to selling the standard technology.

Not corresponding to conventional AI

And the research continued and got very favourable reviews, but I was told by a new head of department that it didn’t correspond to conventional AI, so it must be wrong. That’s when I left, at the time of the family suicide.

I’m here because a few years ago my son said, “When you go, I’ll have to get a skip for all that paperwork.” It takes up a whole side of the garage and includes program listings, correspondence and so on, as well as the report that led to ICL closing down the research.

And I thought that, rather than throw it in a skip, I would get back into AI and see where it fitted in. Basically, what I discovered was that the package I had originally suggested for invoicing, and later developed further, was an unusual type of language.

Take COBOL as a language: it maps the task onto a digitally addressed array. What my system did (and I’d never heard of neural networks in those days) was map the data and the rules into one language, which was mapped onto an array. And if you said to the array, “Here are the active nodes,” the answer fell out. It may seem rather simple, and that’s the problem: it sounds too simple. I don’t think anybody had looked at something so simple, but it did work.

Turing Test and the Black Box

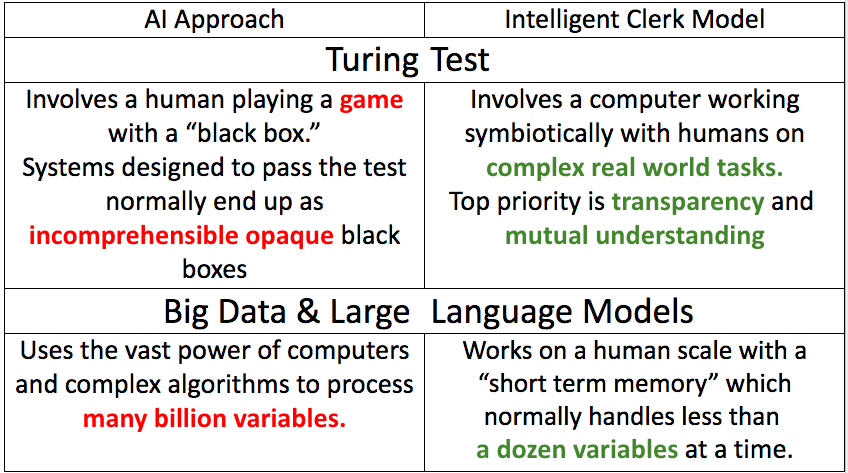

Since then I have been comparing standard AI and the Turing Test with my intelligent clerk model. It’s a lovely test, but it sets up a black box, and if you build a system to pass the Turing Test, you end up building a black box. That is the target. Now if I start off thinking I’ve got a company in a very complex area where everything is always changing, then what I want is a transparent system.

And I started off by saying I don’t give a damn about the rules, whatever it does it must do transparently, I must always be able to explain.

If you design the CPU to address things associatively, you can make a friendly computer, because the computer’s symbolic assembly language can then be mapped in a way similar to the way humans think.

So, in re-examining the CODIL project archive in the light of modern AI research, I have found evidence suggesting that the CODIL approach unconsciously involved (perhaps because I am neurodiverse) reverse engineering the way human short-term memory works.

A key feature was that the language described the task in terms of sets, where each set was a node in an associatively addressed network. The guiding principle of the system I created was that a human must be able to understand what the system does. I hadn’t read the appropriate psychology papers at the time, so when I set up testing, I said, “Nobody can handle 32 variables in their head simultaneously.” But when I started testing, I found that whatever task you did, you never needed to have more than about ten variables active, and usually fewer, which is in line with human short-term memory. Active is the critical word.
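To make the idea of set-based, associatively addressed matching a little more concrete, here is a minimal toy sketch in Python. It is not CODIL’s syntax or its actual implementation: the names `Item`, `Statement` and `respond`, the (attribute, value) representation and the ten-item limit are all illustrative assumptions. Facts and rules share one representation, and stored statements are retrieved by what they contain rather than by numeric address.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set, Tuple

# Illustrative assumption: an item is an (attribute, value) pair.
Item = Tuple[str, str]


@dataclass(frozen=True)
class Statement:
    """A hypothetical stored statement: when every item in `context` is active,
    the items in `adds` become active too. Facts and rules share this form."""
    context: FrozenSet[Item]
    adds: FrozenSet[Item]


def respond(memory: List[Statement], active: Set[Item], limit: int = 10) -> Set[Item]:
    """Match stored statements by content (a subset test), not by numeric address,
    repeating until no statement adds anything new."""
    active = set(active)
    changed = True
    while changed:
        changed = False
        for stmt in memory:
            if stmt.context <= active and not stmt.adds <= active:
                active |= stmt.adds
                changed = True
        # Hypothetical cap echoing the "about ten active variables" observation.
        if len(active) > limit:
            raise ValueError(f"more than {limit} items active at once")
    return active


# Usage: two stored statements and a query context.
memory = [
    Statement(frozenset({("Product", "Widget")}), frozenset({("Price", "5")})),
    Statement(frozenset({("Customer", "Smith"), ("Product", "Widget")}),
              frozenset({("Discount", "10%")})),
]
result = respond(memory, {("Customer", "Smith"), ("Product", "Widget")})
print(sorted(result))
# [('Customer', 'Smith'), ('Discount', '10%'), ('Price', '5'), ('Product', 'Widget')]
```

In this sketch there is no separate program: asking a question is just activating some items, and whatever the stored statements match is the answer, so each step can be traced back to the statement that produced it.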

This is radically different from normal programming, where the task is split into rules and data, which are mapped onto a digitally addressed array of numbers. The underlying story is that the project archive suggests modern AI may be going up a blind alley by neglecting brain modelling. The archive also contains much information about the lack of adequate support for creative blue-sky research in a rapidly developing field where there is a ferocious rat race for funding.

I am now writing up a theoretical paper and also looking at how this information should be archived.

Panel discussion and questions

Mike Short: A question to Vassilis. You didn’t really refer to the language of security and how that changes over time. You perhaps didn’t refer to some of the areas that are now being described as the metaverse. How would those two subjects fit in with your frameworks?

Vassilis: So, the metaverse feeds into the human-computer interaction and virtual reality aspects, and into what I spoke about earlier regarding blockchain and digital object identification.

Security I haven’t really looked at, to be honest, but I think it’s an important factor that shapes these linguistic choices.

Obviously the term itself is used in order to express different things. So, customer security, national security. There’s a vast space between them and one sometimes justifies the other.

Speaking of Jon’s talk, there is this idea that technology has to be applied: if it’s science, it feeds into security and is treated as something to be applied. And, partly in response, I think Lighthill comes soon after the 1969 Mansfield Amendment in the United States, during the Vietnam War, after which all DARPA funding in the States likewise had to be applied in terms of security.

Chris: Basically, as far as I was concerned, there were always black box areas. For a time I was responsible for correspondence with South Africa, Australia and the like, and I had no first-hand experience of farming in any of those areas.

And I think this is an important thing because quite often with black boxes and what’s inside, there’s always going to be something you don’t know.

The important thing I think with humans and the way they handle it is they can handle things they know and they are prepared for things that they don’t know.

And I think this is one of the problems with the connectionist approach.

You can look at my system in that way too, but I’m quite sure that the human brain does not use advanced mathematical statistics to work out the connections.

And I think this is part of the reason why these new AI systems are black boxes: they use mathematical techniques, which may be very, very valuable for some tasks. Don’t get me wrong, modern AI and big data are very, very powerful.

I’m using one of the chatbots at the moment in the research I’m doing. It comes back with ideas that I don’t necessarily agree with, but it does tell me what kind of opposition my idea is up against, and that is very important if you’re trying to be creative.

Question from a man in the audience (James?): A comment for Jon about the Lighthill report, which I’ve managed to have a look at myself. I think one analysis at the time was that his views were shaped by the extreme political differences among the various protagonists in AI.

The question I wanted to ask, though, is about [why he had such views on] building robots, which is somewhat ironic given that robots today are probably one of the major developments in industrial automation and have transformed the world.

Jon: Yeah, absolutely. And it doesn’t even make a lot of sense to call it “building robots”, given how Lighthill then goes on to describe that category, which is actually quite a mixed bag of research areas and activities. It may be that one of the main targets was Edinburgh, where some of the main robot work was going on.

Man in the audience (James?): And he used very colourful language too, I remember: “pseudo-maternal drive”.

Jon: Oh yes, yes, yes, yes, where he speculates about what might be driving interest in general intelligence, and one of his suggestions is that men are supposedly jealous of the possibility of giving birth.

James, I’m delighted you’re here, because your work was really important, and I think in that paper you also suggest that perhaps Lighthill was motivated by that sort of humanistic dislike, scepticism, concern or anxiety about artificial life and artificial intelligence.

That could be part of a cultural response, but I don’t think it works for Lighthill himself. I think you can pin him down, throughout his career, from when he was at Farnborough to when he set up the Institute of Mathematics and its Applications and beyond, to good science and good mathematics.
